For more than a decade, one promise has dominated SEO conversations: “Rank faster on Google.”

Every year, new articles, tools, and shortcuts repeat the same idea: tweak a few things, follow a checklist, and rankings will arrive quickly. Observations drawn from long-term practical analysis show that these assumptions no longer hold. This applies specifically to modern ranking systems, not earlier eras when short-term or manipulative techniques could still produce results.

In 2026, this promise is no longer just unrealistic; it is fundamentally misleading.

The reason is not that search engines are hostile, but that the way ranking works has changed quietly, structurally, and irreversibly.

This article explains why ranking fast is a myth in 2026, why some outdated pages still rank anyway, and what actually works now if you want sustainable visibility across search engines and AI systems.

The insights in this article are not based on isolated experiments or short-term ranking changes. They come from observing how search visibility behaves over long periods across different types of websites, content structures, and update cycles, including how pages gain, lose, or retain trust over time. Rather than focusing on individual tactics, this analysis reflects patterns that emerge only after repeated exposure to how modern search systems, particularly those led by Google, evaluate consistency, reliability, and replacement risk across both traditional results and AI-generated answers.

About the Methodology

The methodology behind this analysis is qualitative and longitudinal rather than experimental or tool-driven. Instead of relying on short-term tests, isolated case studies, or single-metric correlations, it is based on observing recurring patterns in how search visibility evolves across different content types, site structures, and competitive contexts. This includes how pages move through evaluation phases, how trust expands or contracts after changes, and how consistency, internal coherence, and user satisfaction influence long-term authority. The approach reflects how modern large-scale search platforms, particularly those led by Google and AI-driven answer engines, assess reliability as an accumulated outcome shaped by continuity, behavior, and replacement risk, rather than as a short-term ranking signal.

The Problem with the Idea of Ranking Fast

The phrase “ranking fast” is built on assumptions that once made sense but no longer describe how AI-integrated search systems work.

It assumes that:

- Search engines evaluate pages in isolation

- Rankings are decided quickly after publication

- New content is judged primarily on optimization signals

None of these assumptions holds in 2026.

Modern search systems, led by Google, do not treat pages as standalone assets. A page is no longer evaluated only for what it says, but for where it fits, what it reinforces, and whether it remains consistent over time.

This shift applies equally to ranking algorithms and AI-driven answer systems, which increasingly evaluate sources as part of broader knowledge structures rather than isolated documents.

Ranking is no longer a moment. It is a process of validation.

When a new page is published, search systems do not ask: “Is this page optimized well enough to rank?”

They ask: “Is this source likely to remain reliable for this category of knowledge?”

That question cannot be answered instantly. It requires observing:

- Whether the site explains related topics consistently

- Whether terminology stays stable across pages

- Whether future content contradicts or reinforces earlier ideas

- Whether users repeatedly trust the source for similar questions

Optimization signals still matter, but they function as entry requirements, not decision-makers.

In 2026, ranking is less about passing a checklist and more about earning eligibility over time. Pages are not promoted because they are new or well-optimized. They are promoted when the system becomes confident that replacing them later would be unnecessary.

This shift is subtle, but it changes everything. Ranking fast assumes a race. Modern search operates more like a peer review. And peer review, by design, does not move fast; it moves carefully.

Why Old, Outdated Pages Still Rank (Even in 2026)

If ranking fast is a myth, a natural question follows: Why do some 2–3-year-old SEO articles still rank, even when the advice is outdated? The answer is not freshness. It is inertia.

Ranking inertia explained:

Inertia sets in once a page:

- Has ranked consistently

- Has satisfied users historically

- Has not been clearly replaced by something better

At that point, search engines treat it as stable knowledge. They do not downgrade content simply because:

- The year has changed

- New tools exist

- Experts disagree

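Unless user behavior signals break, inertia protects old winners. This is why outdated advice can survive, and why copying it does not work: a copy reproduces the words, but not the ranking and user-satisfaction history that protects the original.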

Why Ranking Fast Advice Keeps Failing New Sites

Most modern SEO advice fails for a simple but critical reason: it attempts to skip trust formation.

Much of today’s SEO content still operates on an outdated belief that visibility can be accelerated primarily through activity, such as publishing more, optimizing harder, or reacting faster than competitors. In earlier eras, that approach sometimes worked. In 2026, it reliably backfires.

The most common patterns are as follows:

- Publishing many posts in a short time to signal freshness

- Aggressively optimizing titles and headings to match perceived intent

- Chasing trending keywords without topical grounding

- Rewriting or mimicking what already ranks, assuming proximity equals eligibility

On the surface, these actions appear productive. From the perspective of modern search systems, they introduce instability.

In 2026, rapid publishing and aggressive optimization no longer signal authority. They signal uncertainty.

Search systems, particularly those led by Google, now assume that genuinely authoritative sources do not need to rush. They tend to explain before they expand, and they remain consistent before they scale. When a new site publishes heavily, pivots topics quickly, or mirrors existing winners too closely, it creates a pattern that systems cannot yet trust.

As a result, these behaviors do not accelerate rankings; they trigger extended evaluation.

This does not contradict Query Deserves Freshness (QDF). Speed still matters for time-sensitive information, but only when supported by an already trusted domain and stable source.

The Probation Phase Most Sites Don’t Realize They’re In

Modern search does not immediately reward new sources. It observes them. This observation happens in stages that resemble probation more than competition:

- Consistency is observed: Are concepts explained the same way across pages?

- Limited queries are tested: Long-tail, low-risk searches are used to measure fit

- User satisfaction is measured: Not just clicks, but engagement stability over time

- Trust is expanded gradually: Only after patterns remain unbroken

This process is intentionally slow. From the outside, it looks like nothing is happening. Internally, systems are deciding whether the source is worth integrating more deeply into results and AI-generated answers.

When site owners attempt to “force” rankings during this phase by changing titles frequently, rewriting content, or pivoting strategies, they unintentionally reset the evaluation clock. The system is no longer confident that it is observing a stable source.

Why Acceleration Tactics Now Cause Delays

The irony of modern SEO is this: The more aggressively a new site tries to rank, the longer it often takes.

That is because ranking systems are no longer rewarding effort. They are rewarding predictability, coherence, and patience. Sources that demonstrate restraint by staying topically focused, publishing deliberately, and allowing content to mature tend to progress through trust phases faster than those that constantly optimize for short-term movement.

In 2026, visibility is not granted to the loudest source. It is granted to the one that proves it will not need to be replaced. And that proof cannot be rushed.

What Google Actually Measures in 2026 (Not What Tools Show)

SEO tools still focus on:

- Keyword difficulty

- Backlinks

- Page optimization scores

But search systems increasingly prioritize signals that tools cannot fully measure:

- Topical consistency across pages

- Conceptual alignment (no contradictions)

- Internal knowledge structure

- Historical user satisfaction

- Entity reliability over time

This is why two pages with similar optimization can perform very differently. One fits into a coherent knowledge system. The other is just another article.

The Role of AI in Killing the “Fast Ranking” Myth

Generative engines and AI-driven search experiences did not replace SEO; they exposed weak SEO. AI systems:

- Prefer explanations over tricks

- Favor sources that define concepts consistently

- Avoid pages that exist only to rank

This is why many pages still rank in blue links but disappear from AI Overviews. Fast content may rank briefly. Structured knowledge is remembered.

AI systems favor sources that function as references: sources whose explanations remain valid even when individual tactics or tools change.

Large language models (LLMs) used in search interfaces evaluate sources differently than ranking algorithms; they prioritize internal consistency, definitional clarity, and conceptual continuity over tactical optimization.

What Actually Works in 2026 (And Why It’s Slower, But Stronger)

Ranking in 2026 is no longer a question of speed. It is a question of eligibility. Eligibility applies not only to rankings, but to whether a source is remembered, reused, or cited by AI systems over time. Once a source crosses this eligibility threshold, visibility tends to expand across multiple interfaces rather than remaining confined to a single results page.

Modern search systems are not trying to surface the newest or most optimized page. They are trying to identify sources that can be safely relied upon over time. This shift explains why some sites appear to move slowly at first, and why, once accepted, they become difficult to displace.

Here is what consistently works now.

1. Build conceptual authority before chasing keywords

In earlier eras, keywords acted as entry points. In 2026, they act more like labels applied after trust is earned.

Search systems increasingly reward sites that demonstrate:

- Depth of explanation, not surface coverage

- Consistent terminology across multiple pages

- Clear relationships between ideas, concepts, and definitions

When a site explains a topic deeply, uses the same language to describe it everywhere, and connects that explanation to related concepts, it begins to look less like a collection of pages and more like a knowledge system.

This is why glossaries, pillar pages, and explanatory content compound over time. Each new page does not start from zero. It reinforces an existing conceptual framework, making the entire site easier to understand, evaluate, and trust.

In 2026, authority is not built by ranking for many keywords. It is built by being internally coherent.

Many sites appear to ‘win’ briefly by exploiting timing or gaps, only to lose visibility once systems reassess long-term reliability.
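The point about consistent terminology can be spot-checked on your own site. The sketch below is only a self-audit aid and says nothing about how any search engine measures consistency. The variant groups, page names, and page text are all hypothetical, and the function name `find_term_variants` is made up for illustration.

```python
import re
from collections import defaultdict

# Hypothetical variant groups: each key is the preferred term, and the values
# are alternative phrasings that mean the same thing on this imaginary site.
VARIANTS = {
    "generative engine optimization": ["geo", "ai search optimization"],
}

def find_term_variants(pages, variants):
    """Return {preferred_term: {phrasing: [page names]}} for every concept
    that appears under more than one phrasing across the given pages."""
    report = {}
    for preferred, alternatives in variants.items():
        usage = defaultdict(list)
        for phrasing in [preferred, *alternatives]:
            # Whole-word, case-insensitive match for this phrasing.
            pattern = re.compile(r"\b" + re.escape(phrasing) + r"\b", re.IGNORECASE)
            for name, text in pages.items():
                if pattern.search(text):
                    usage[phrasing].append(name)
        # Only report concepts described in more than one way.
        if len(usage) > 1:
            report[preferred] = dict(usage)
    return report

# Hypothetical page text, keyed by an arbitrary page name.
pages = {
    "glossary": "Generative Engine Optimization is about being reference-worthy.",
    "guide": "AI search optimization helps pages get cited.",
    "faq": "Generative engine optimization builds on classic SEO.",
}

inconsistent = find_term_variants(pages, VARIANTS)
print(inconsistent)
```

Here the guide page describes the same concept under a different name than the glossary and FAQ, which is the kind of drift the list above warns against. A real audit would need a hand-maintained variant list, since the script cannot know which phrasings are synonyms.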

2. Let time validate you instead of fighting it

There is no shortcut to trust, and modern systems are designed to detect attempts to find one.

Pages that perform best over time tend to share three traits:

- They remain structurally and conceptually stable

- They are reinforced by internal links from related content

- They are not constantly rewritten to chase short-term movement

Stability matters because search systems measure reliability longitudinally. They observe whether a source:

- Stands by its explanations

- Maintains consistent viewpoints

- Evolves carefully instead of pivoting frequently

Aggressively optimized pages often peak early and decay quickly. Stable pages age better because they give systems confidence that the information will not need to be replaced in the near future.

In 2026, time is no longer an enemy of ranking. It is one of its strongest validators.
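One of the traits above, reinforcement by internal links from related content, is easy to check for yourself. The following is a minimal sketch that assumes you already have a map of which pages link to which. The URLs and the function name `inbound_link_counts` are hypothetical, and the count is a rough housekeeping signal, not a ranking metric.

```python
def inbound_link_counts(link_graph):
    """Count how many distinct other pages link to each page.

    link_graph maps a page URL to the list of internal URLs it links to.
    """
    counts = {page: 0 for page in link_graph}
    for source, targets in link_graph.items():
        for target in set(targets):  # ignore repeated links on one page
            if target == source:     # ignore self-links
                continue
            counts[target] = counts.get(target, 0) + 1
    return counts

# Hypothetical internal link map for a small site.
link_graph = {
    "/pillar": ["/glossary", "/guide"],
    "/glossary": ["/pillar"],
    "/guide": ["/pillar", "/glossary"],
    "/orphan": [],
}

counts = inbound_link_counts(link_graph)
orphans = [page for page, n in counts.items() if n == 0]
print(counts)
print(orphans)
```

Pages that show up in `orphans` receive no internal links at all, so nothing else on the site reinforces them.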

3. Optimize for understanding, not ranking

One of the clearest shifts in modern search is this: systems increasingly reward clarity of explanation, not procedural completeness.

Content that performs well now tends to help users understand:

- Why something works the way it does

- Why certain approaches fail

- How systems behave under different conditions

This type of content reduces ambiguity. It answers not only what to do, but why it matters. As a result, it satisfies a broader range of users – beginners, intermediates, and decision-makers alike.

Step-by-step lists may still rank temporarily, but they are fragile. Explanatory content survives because it remains relevant even as tools, tactics, and interfaces change.

This is why pages built around understanding tend to survive algorithm updates. They are aligned with how both humans and AI systems learn.

4. Think beyond one search engine

In 2026, visibility is no longer confined to a single results page. Search now happens across multiple surfaces:

- Traditional blue-link SERPs

- AI-generated answers and summaries

- Multi-engine discovery environments

Optimizing for only one interface creates fragile visibility. A page may rank briefly in one system while remaining invisible everywhere else.

This is where Generative Engine Optimization (GEO) becomes relevant, not as a tactic, but as a recognition of how authority is now distributed. GEO focuses on being understandable, consistent, and reference-worthy across systems, not just competitive in one.

Ranking fast in one place is temporary. Being trusted across systems is durable. That durability is slower to build, but once established, it compounds.

The Real Timeline Nobody Likes to Hear

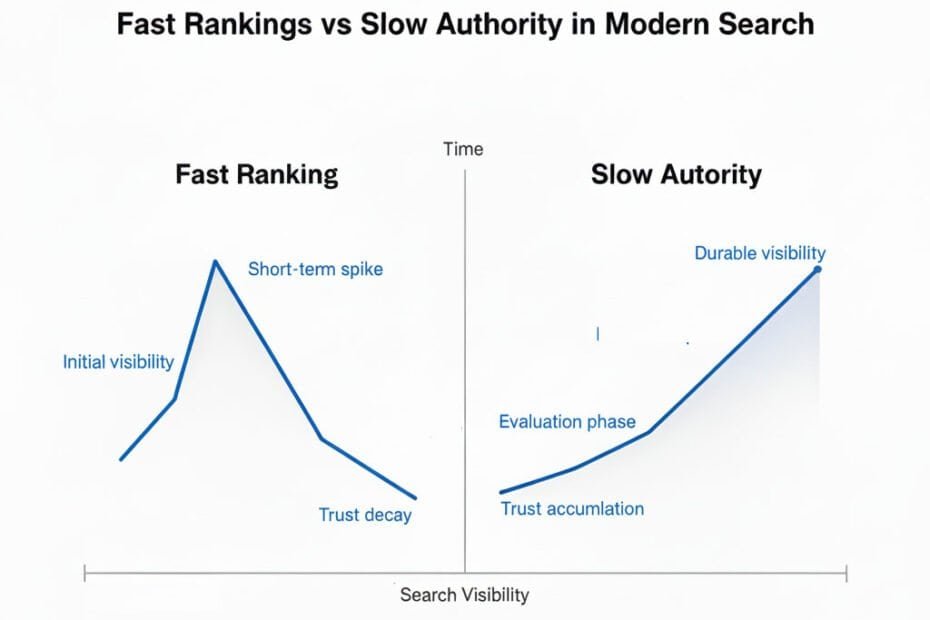

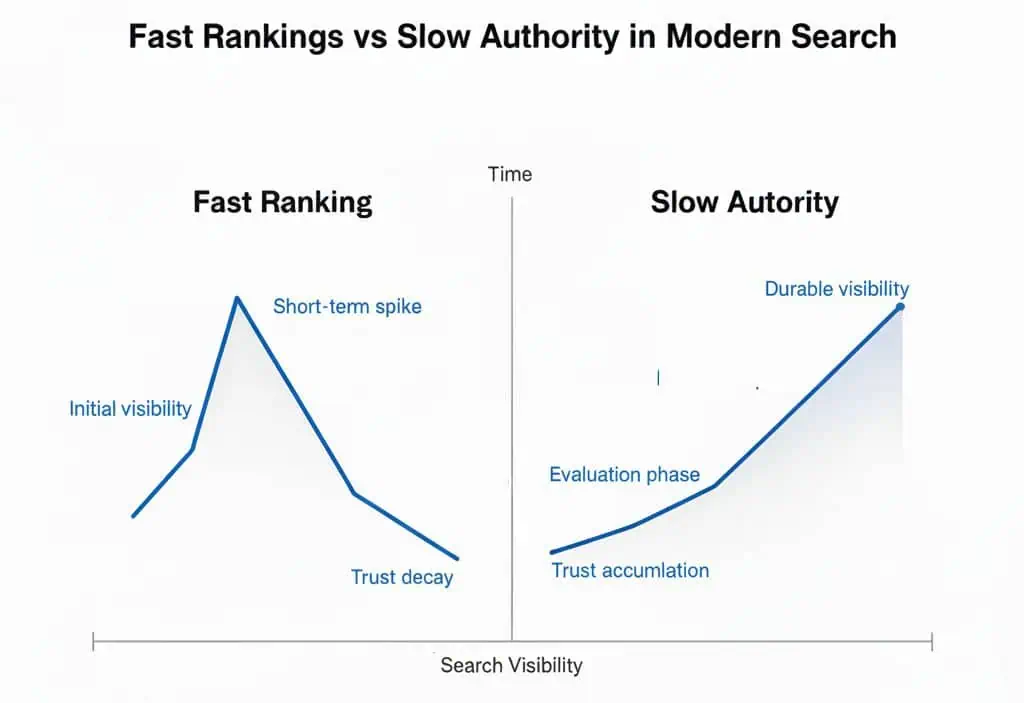

Here is the uncomfortable truth most SEO discussions avoid:

- Fast rankings are often temporary

- Slow authority is long-lasting

This is not a philosophical statement. It is an observable pattern in how modern search systems, led by Google, evaluate, promote, and eventually replace sources.

Pages that rise quickly usually do so because they exploit a temporary gap:

- A trending query

- Low competition at a specific moment

- A tactic that has not yet been saturated

When those conditions change, the rankings fade, often quietly and without warning. Authority does not behave that way.

The Strategic Advantage of the Evaluation Clock in 2026

The contrast between short-term optimization and long-term authority can be summarized as follows:

| Old Habit | 2026 Shift |
| --- | --- |
| Keyword Chasing: Targeting high-volume terms in isolation. | Topical Grounding: Explaining “why” and “how” before scaling volume. |
| Constant Rewriting: Updating titles to “force” a rank change. | Conceptual Stability: Maintaining consistent definitions across the site. |
| Activity Over Trust: Publishing 20 posts a week to look “active”. | Restraint & Maturity: Allowing content to age and gain longitudinal user signals. |

In 2026, search systems are not racing to reward novelty. They are optimizing for replacement cost.

Before promoting a source widely, systems effectively ask:

“If we show this page to millions of users, will we need to undo that decision soon?”

Sources that spike quickly tend to raise that risk.

Sources that grow slowly reduce it.

That is why the sites that ultimately win in 2026 usually share the same characteristics:

- They did not experience sudden visibility spikes

- They did not chase every new trend or update

- They built clarity before volume, not the other way around

Their growth looks uneventful at first. But once trust crosses a threshold, it compounds.

Final Thought: Replace the Question Entirely

Instead of asking: “How can I rank faster on Google?”, ask: “How do I become a source Google doesn’t want to replace?” That question changes:

- How you publish

- What you publish

- How often you change things

- How AI systems interpret your site

In 2026, ranking fast is not the goal; being trusted is.